What is your view of economic dynamics and what are the important questions in this area?

Economic dynamics - I must say that I was influenced greatly by the dynamical way of looking at things by Richard Goodwin. He emphasised non-linearity, but also looked at linear dynamics. First Richard Goodwin and then Bjorn Thalberg in Lund also emphasised dynamics. … Economic dynamics was associated for me with non-optimum cycle models and this was what Goodwin emphasised all the time. … Economic dynamics was a vehicle through which I was able to see the non-maximum allocation processes. That was important for me because dynamics was a tradition I came with from my engineering studies in mechanical engineering, particularly Hayashi’s lectures on oscillation theory and aerodynamics lectures on vibration theory. They were essentially dynamic things. I specialised in hydrodynamics, quantum dynamics, electrical dynamics, all kinds of dynamics, and it was a heartening experience to see that it was important in economics as well, but I was puzzled as well that these dynamical questions did not relate to the dynamics of engineering systems apart from electrical oscillation theory. … Anyway, economic dynamics meant for me a way to bring in non-optimum dynamical questions into it, but it is only recently I have been able to analyse this.

You seem to argue that there are varieties of mathematics in your writings. What are these varieties and do economic insights depend crucially on the kind of mathematics that we choose?

Well, first of all I can answer by saying I don’t think there is a right kind of mathematics. I don’t think orthodox economists, conventional economists, know anything of the varieties of mathematics. ... Economists do not differentiate enough between finite arguments and infinite arguments. Accuracy depends on whether you are talking about infinite or finite. Approximation depends on whether you are talking about finite or infinite. Then it comes to the definition of infinity.…That is why I think that one should know one branch of mathematics well, being aware of many other branches of mathematics that are possible. I will just mention smooth infinitesimal analysis, non-standard analysis, Russian constructivism, intuitionist constructivism. You can read ordinary mathematics in any number of books, but these things you can still read the classics and understand. That means Bishop, Brouwer, Turing and so on.

Could you explain its scope and the motivations behind computable economics in a way that relates to understanding and investigating economic problems?

Computable mathematics – intuition is important, imagination is important and the intuitionism of Brouwer is important. Approximations are important, algorithms are important...My computable economics was going towards problem solving, but in a classic computability sense it was about showing the impossibility of solving the problems of standard economics instead of showing them as paradoxes and anomalies, as Allais and Ellsberg did in the beginning. For example, Savage’s book says, ‘I thank Allais and Ellsberg for pointing this out and now I correct my subjective expected utility statistics,’ but he never corrects finite versus infinite, continuous versus non-continuous and so on.

The “agents as problem-solvers” view or metaphor seems to be in contrast with agents as optimisers, maximisers or signal processors. Why is problem-solving a better metaphor in your view?

Problem solving is important because that is what we have in economics and social science: problems. We have to solve them. We find methods to solve them and these methods, if they are tied to the straitjacket of rationality, equilibrium and optimisation, we can only solve a few of the problems. We have to solve the problems anyway so we have to expand the methods and expand the concepts of standard economics.…You develop the mathematics to solve the problems. You don’t take the mathematics as given and then tailor the problem to suit the mathematics, to suit the solution of the mathematical principle. You tailor the mathematics. ... Standard economics emasculates problems by the mathematics that they know or mathematics that is already developed. The danger is they will apply it, like the computational economists do, like the agent-based economists do. They will take constructive mathematics as it is and apply it to computational economics and to interpret what they call agent-based economics, whereas mathematicians expand this all the time due to real problems.… I think problems are intrinsic to economics and social science in general. Agents individually and collectively try to solve problems. They try to mathematise it, but they develop the mathematics. Sometimes we learn about it, sometimes we don’t learn about it.

You make a distinction between classical and modern behavioural economics in your writings. Could you elaborate on what this distinction is?

Classical behavioural economics is the kind that Simon, Nelson and Winter, Day and people of that kind emphasise. Modern behavioural economics is the kind that comes out of the acceptance of subjective expected utility theory. That means you accept subjective probability theory of the Savage variety and the resulting subjective expected utility. None of this is accepted by the classical behavioural economists. They accept algorithmic probability theory, which is wedded to von Mises’s definition of patterns for randomness first and probability afterwards. Randomness is patternless; patternless is randomness. Patternless is defined algorithmically. These people are completely algorithmic in their definitions of behavioural entities and their decision processes for solving problems. The modern behavioural economists who accept subjective expected utility and therefore subjective probability do not accept an algorithmic definition of probability theory or utility theory, so they have to face Allais paradoxes and Ellsberg paradoxes, which they solved logically, in the classical logical way. This tradition names the paradoxes as anomalies and goes on through experimental economics and modern behavioural economics of the Thaler variety. They accept all the tenets of neoclassical economics as far as utility maximisation and equilibrium search are concerned. None of this is accepted by classical behavioural economists. That is the distinction.

What would non-equilibrium or a-equilibrium economics look like in that case?

I prefer not to have any equilibrium concept. The classical behavioural economists did not work with any equilibrium concept. They defined non-equilibrium, but I don’t work with any equilibrium at all and computability theory has no equilibrium at all. An algorithm either stops or doesn’t stop. It may not stop. Many algorithms don’t stop at all... I don’t want to use the word equilibrium or non-equilibrium or a-equilibrium, without equilibrium. Economics is algorithmic. Algorithms have no equilibrium concepts. They have stopping rules. They are made from approximations of intuitions. That is enough for me.

What is the place for specific historical and institutional elements in this framework?

History is very important, but not in the sense that Joan Robinson talks about history versus equilibrium. She talks about history in an ahistorical sense: history is given. History is not given. We reinterpret history all the time, so we reinterpret intuitions all the time because we reinterpret history all the time. History is important and study of history is important. Knowing history is important. To the extent that you know history and are a master of history, you can talk about intuitions approximated to algorithms. Algorithms are history dependent.

In your work you put Keynes, Sraffa, Simon, Brouwer, Goodwin and Turing together. What do you see as a single connecting thread?

You talk about ASSRU (Algorithmic Social Science Research Unit) really because in that we put together Simon, Turing, Brouwer, Goodwin, Keynes and Sraffa. This is about computability, bounded rationality, satisficing, intuitionism and then the irrelevance of equilibrium in Sraffa’s work and Goodwin’s cycle theory and Keynes’s overall view of the importance of morality in daily affairs, if you like.

You spoke about varieties of mathematics and you touch upon many different traditions and varieties of economics.

I think there are only two types of economics, but there are many types of mathematics. Finite, but many types… only two types of economics: classical and neoclassical. The neoclassical model requires full employment, equilibrium, rationality and all the paraphernalia of this. Classical methods do not require any of these concepts. There can be unemployment, there can be no equilibrium and so on.… When I teach, I teach students first of all to have an open mind; secondly, to master one kind of mathematics and one kind of economics, but with an open mind to be sceptical about what they master. Scepticism is the mother of pluralism in my opinion. I don’t teach pluralistic economics, I don’t advocate pluralism, but I advocate scepticism, which is one step ahead or above pluralism. To be sceptical of economics and mathematics is a healthy attitude. To be sceptical in general is a healthy attitude. It is because some of these people were sceptical of conventional modes of thought that they were able to extend, generalise and use other types of thought and way of reasoning and so on. This is true of the people like Brouwer and this is true of economics people like Keynes. Keynes was a master of scepticism. There used to be a saying, ‘If you have four views, three of them are held by Keynes,’ but Keynes also is supposed to have said, ‘When facts change, I change.’ That means scepticism. It is not that facts change, it is just to be sceptical of whatever you do. To emphasise the answer to your question on pluralism: I teach people to be sceptical, but to learn one mathematics and one economics thoroughly with a sceptical, open mind to its generalising. That is what made Sraffa great, that is what made Brouwer great, that is what made great people yet. The moment Hilbert changed and became non-sceptical, he became dogmatic. Turing was always sceptical. He was always innocent and he was always sceptical.

KEY:

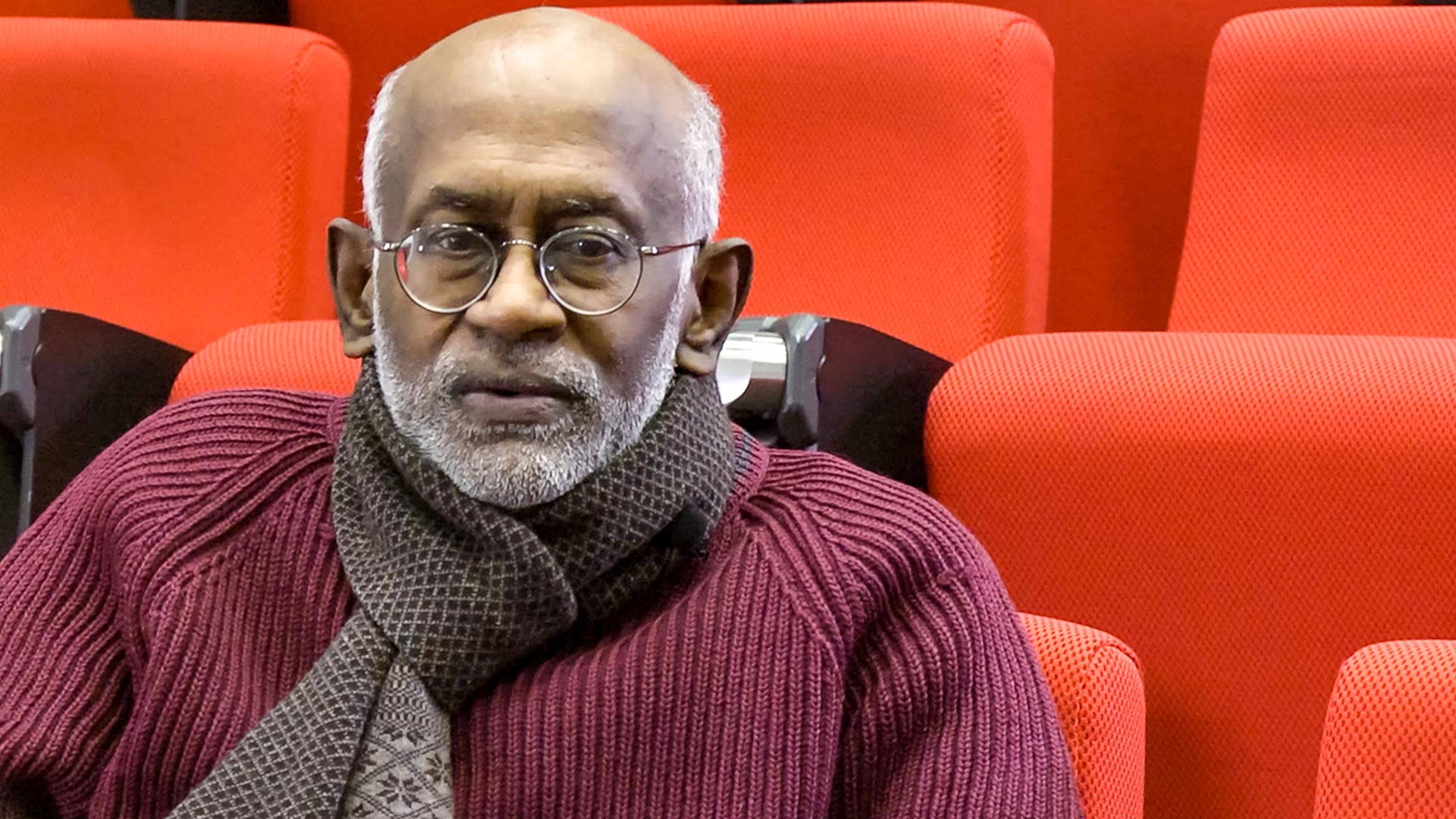

RV: Ragupathy Venkatachalam (interviewer)

KV: Kumaraswamy Vela Velupillai

RV: Welcome to Goldsmiths, Professor Velupillai. It is a great pleasure for us to have you in the IMS Goldsmiths interview series. Velupillai has been professor of economics at different universities - University of Trento (Italy), UCLA, the European University Institute (Italy) and many other universities in Europe, Asia and the Americas, most recently at the New School for Social Research, USA. Let me start with an important area of your research which is economic dynamics. What is your view of economic dynamics and what are the important questions in this area?

KV: First of all, thank you very much for having me. To be in this interview series is an honour. I don’t pretend to be in the same class as the others you have interviewed in this category, but I am grateful to you and to Goldsmiths for having had me. Economic dynamics - I must say that I was influenced greatly by the dynamical way of looking at things by Richard Goodwin. He emphasised non-linearity, but also looked at linear dynamics. First Richard Goodwin and then Bjorn Thalberg in Lund also emphasised dynamics. Both of them emphasised also the Phillips machine and cybernetics. In a way they emphasised analogue computing traditions in economics and mathematics and applied mathematics. Goodwin was an applied mathematician in one way and Thalberg in another way, but I was also influenced by Paul Samuelson’s Foundations of Economic Analysis, part 2. I came to understand most of all from Paul Samuelson’s book and from Goodwin’s lectures and teachings and Thalberg that optimisation was very difficult in dynamical systems. They never raised the question of optimality and dynamics. Strange to say, Samuelson spent most of his life on optimum allocation of scarce resources, which is how Robbins used to define economics. In macroeconomics, what Lloyd Metzler emphasised as Samuelson’s contribution to economics in Foundations of Economic Analysis is part 2. When Stigler reviewed it, there were many other reviews by leading economists - Baumol and Roy Allen and so on - but only Metzler emphasised part 2 of the Foundations. In Samuelson’s Nobel lecture he had a section on non-maximum systems for which he took as an example the multiplier-accelerator trade cycle model. Economic dynamics was associated for me with non-optimum cycle models and this was what Goodwin emphasised all the time. Even his linear models were non-optimum cycle models, unless it was general equilibrium theory. I will come to that later on.
Economic dynamics was a vehicle through which I was able to see the non-maximum allocation processes. That was important for me because dynamics was a tradition I came with from my engineering studies in mechanical engineering, particularly Hayashi’s lectures on oscillation theory and aerodynamics lectures on vibration theory. They were essentially dynamic things. I specialised in hydrodynamics, quantum dynamics, electrical dynamics, all kinds of dynamics, and it was a heartening experience to see that it was important in economics as well, but I was puzzled as well that these dynamical questions did not relate to the dynamics of engineering systems apart from electrical oscillation theory. I had learnt a little bit of electrical oscillation theory, but mostly mechanical vibration theory. When I studied and read cycle theory also under Thalberg, it impressed me that he emphasised Frisch’s work on linear dynamics and oscillation theory. Goodwin emphasised Schumpeter’s business cycle ideas in his lectures and Schumpeter had a mechanical theory of oscillation. He didn’t pay much attention to Kalecki and Tinbergen, but Frisch paid attention to Schumpeter’s mechanical theory of oscillation. That was a clock theory of oscillation. However, Frisch did not use it. Frisch only gave the example of Schumpeter’s oscillation in his model and then he took the wrong quote from Wicksell and emphasised the oscillation of a rocking horse if it is banged from outside. I have not been able to understand whether Frisch had Slutsky’s paper before or after he wrote the Cassel Festschrift article. Slutsky was at that time still not yet persona non grata, but by the time Frisch published his article in Econometrica, he was persona non grata. Frisch was proficient in statistical time-series analysis – that was his thesis as well from 1926 – and in business cycle analysis. 
He was a ‘faculty opponent’ – in more familiar terminology, the external examiner – of Johan Åkerman’s thesis in Sweden in 1927 or 1928. Frisch was well equipped to understand the ARMA (autoregressive moving average) model of Slutsky, but I have not been able to figure out whether he read Slutsky before or after. Frisch made a model. It was a nonlinear model. When he linearised it he said that this is an oscillation model. My friend and colleague Stefano Zambelli has spent 20 or 30 years showing that Frisch’s model doesn’t oscillate. You have to knock it from outside for it to oscillate. Anyway, economic dynamics meant for me a way to bring in non-optimum dynamical questions into it, but it is only recently I have been able to analyse this.
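
Editorial illustration (not part of the interview): the multiplier-accelerator trade cycle model mentioned in the answer above is usually written in textbooks as a second-order difference equation, Y_t = g + c(1 + v)Y_{t-1} - c·v·Y_{t-2}, where c is the propensity to consume and v the accelerator coefficient. The minimal sketch below assumes that textbook form; the function names and the parameter values in the usage note are hypothetical and chosen only for illustration.

```python
import math


def simulate(c, v, g, y0, y1, periods):
    """Iterate Y_t = g + c*(1 + v)*Y_{t-1} - c*v*Y_{t-2} and return the income path."""
    path = [y0, y1]
    for _ in range(periods - 2):
        path.append(g + c * (1 + v) * path[-1] - c * v * path[-2])
    return path


def classify(c, v):
    """Classify the dynamics from the roots of x^2 - c*(1 + v)*x + c*v = 0."""
    discriminant = (c * (1 + v)) ** 2 - 4 * c * v
    if discriminant >= 0:
        # Real roots: no cycle is generated by the model itself.
        return "no oscillation"
    # Complex roots: the modulus sqrt(c*v) decides whether cycles die out or grow.
    modulus = math.sqrt(c * v)
    if modulus < 1:
        return "damped oscillation"
    if modulus > 1:
        return "explosive oscillation"
    return "constant-amplitude oscillation"
```

With the illustrative values c = 0.5, v = 1 and g = 1, `classify(0.5, 1.0)` reports a damped oscillation, and `simulate(0.5, 1.0, 1.0, 1.0, 1.0, 60)` overshoots and then settles at the stationary level g / (1 - c) = 2. Nothing in the recursion maximises anything, which is one way to read the phrase "non-maximum system".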

RV: You spoke about cycles and cycle theory, but there is also the question of growth, which has been important in economic dynamics as well. You point out that growth theory is different from development. Could I have your thoughts on that?

KV: I have come to realise that growth theory is mostly steady-state growth theory, which is no different from static theory. It is either steady state or stationary state. Harrod, who emphasised growth theory, knew very little dynamics. His dynamics was classical dynamics, mechanical dynamics of the sort that was in Ramsey’s father’s book: dynamics, statics and so on. He never went beyond that. Tinbergen pointed out to him that he can’t model dynamics with first order differential equations because first order differential equations don’t oscillate. Then he went to growth theory because he couldn’t model cycles with it, but growth theory is static. Any steady state theory can be transformed. I have come to realise that growth theory should be part of cycle theory and not the other way about. This is the distinction between Schumpeter and Hudson. Hudson’s metaphor for cycle theory is that growth must be part of cycles, not cycles part of growth. Schumpeter had this view – i.e., cycles as an outcome of growth - and my teachers took the Schumpeter view. It is only a small step to go from there to the neoclassicals and the new classicals, who assume that there is trend growth. I don’t believe any more in trends. I think growth is higgledy-piggledy growth and it has to be analysed by itself as an outcome of cycle theory. If it is to be dynamic, growth theory has to be wedded to cycle theory. Then development theory is part of cycle theory. Development is up and down all the time. Some countries develop, some countries don’t, but all countries develop some of the time and fall at other times. This is the same with Japan. New growth theory came out of the four tigers and Japan, not for any other reason. For example, neoclassical growth theory embellished with endogenous elements was criticised by Uzawa and Nelson. They said this has nothing to do with endogenous growth theory at all – neoclassical theory. Both Nelson and Uzawa said this, from two different standpoints: Uzawa had become a communist by then (by the time I met him, in the early 1990s), and Nelson argued from an evolutionary standpoint. Nelson’s evolutionary standpoint has an algorithmic basis in it. Growth theory, development theory, cycle theory, have to be algorithmic.
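
Editorial illustration (not part of the interview): the remark attributed to Tinbergen, that first order differential equations don't oscillate, can be checked in the simplest case. The sketch assumes a scalar linear equation with constant real coefficients; the symbols $a$, $b$, $x^{*}$, $\zeta$ and $\omega$ are illustrative and do not come from the interview.

$$
\dot{x} = a x + b,\quad a \neq 0
\;\;\Longrightarrow\;\;
x(t) = x^{*} + (x_0 - x^{*})\,e^{a t},
\qquad x^{*} = -\frac{b}{a}.
$$

The deviation $x(t) - x^{*}$ keeps the sign of $x_0 - x^{*}$ for all $t$, so the path moves monotonically towards or away from $x^{*}$ and never cycles. A second-order equation is the lowest order that can oscillate:

$$
\ddot{x} + 2\zeta\omega\,\dot{x} + \omega^{2} x = 0,\quad 0 \le \zeta < 1
\;\;\Longrightarrow\;\;
x(t) = A\,e^{-\zeta\omega t}\cos\!\left(\omega\sqrt{1-\zeta^{2}}\;t + \varphi\right),
$$

with $A$ and $\varphi$ fixed by the initial conditions.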

RV: We will come to the algorithmic bit in a second, but before that I have a more general question. We have had, as you pointed out, an impressive arsenal of mathematical tools from dynamical systems theory: differential equations, difference equations and so on, linear, nonlinear, to encapsulate and model economic dynamics. Is there something special about economic dynamics? In other words, are these tools powerful enough to capture all the many-splendoured richness of what we understand as economic dynamics or evolution of the economic system?

KV: I remember Stigler saying about Samuelson’s Foundations of Economic Analysis that it was a book about what he knew about difference and differential equations. But Samuelson knew only a small part of difference and differential equations. For example, there were no partial differential equations. How to approximate was not part of Samuelson’s question. Economic dynamics is about approximations and algorithms. Approximations are dynamic elements and algorithms are intrinsically dynamic. Anything that is dynamic has to have an algorithmic component and an approximation component. Neither of them is available in Samuelson’s approach to economic dynamics. Goodwin knew of the approximation dogma and algorithmic dogma, but he didn’t know how to bring it into economic dynamics. Lately, in the last 20 years or so, I began to realise that economic dynamics is algorithmic and emphasises approximation, but no economic textbook emphasises algorithms and approximations in the same breath when talking about economic dynamics. Not even radical textbooks. In fact, radical textbooks do not talk about approximations at all. They talk sometimes about algorithms. Nelson and Winter’s evolutionary theory of growth is intrinsically dynamic and intrinsically algorithmic, but not about approximations. I began to realise that dynamics is approximations and algorithms. That was the reason why I went the way I did into this.

RV: You have touched upon algorithms, approximations and other mathematical concepts. Mathematics is often seen as a way to make economic reasoning and inferences precise, at least by most people. But you seem to argue that there are varieties of mathematics in your writings. What are these varieties and do economic insights depend crucially on the kind of mathematics that we choose?

KV: You asked three questions there. One is about reasonings and inferences in mathematics, whether it helps economics; one whether economics requires mathematics to make it accurate because mathematics is supposed to be accurate; and the third one is about the varieties of mathematics, whether economists have been wedded to the wrong kind of mathematics. You imply – you don’t ask – is there a right kind of mathematics? Well, first of all I can answer by saying I don’t think there is a right kind of mathematics. I don’t think orthodox economists, conventional economists, know anything of the varieties of mathematics. A simple example is Paul Samuelson, who doesn’t even know the classical mathematics that he claims to be a master of completely, but he is a sympathetic, generous person and he never mentions constructive mathematics although he does mention non-standard analysis. Still he is wedded to classical mathematics. At the other extreme, there is a graduate textbook which is used in Columbia, for example, in mathematical economics, which belittles constructive mathematics, belittles Brouwer and Heyting and their rejection of the law of the excluded middle. Now there are all kinds of mathematics that do not rely on the law of the excluded middle, tertium non datur, in infinite cases. Economists do not differentiate enough between finite arguments and infinite arguments. Accuracy depends on whether you are talking about infinite or finite. Approximation depends on whether you are talking about finite or infinite. Then it comes to the definition of infinity. For example, Turing defined potential infinite tapes, that is tapes that can be extended any amount of times. He was working with Brouwer’s definition of continuity, of infinity. Brouwer’s was potential infinity. That is what Turing mentioned. Now this doesn’t come to terms with accuracy, inference or reasoning in economics. 
Jevons, for example, assumed that economic reasoning needed mathematics, but he knew very little of more than ordinary mathematics. Boole developed mathematics and Bertrand Russell, Lord Russell, is on record as saying Boole invented pure mathematics and if not for Boole there would not be logicism. If not for logicism, there wouldn’t be formalism and intuitionism. If not for that, there wouldn’t be foundations of mathematics, there wouldn’t be a philosophy of mathematics. You can go back to how to model economic reasoning and inferences in economics and the power of the computer, mechanical aids to reasoning, so you go back to Babbage as well. Reasoning and inferences in economics. That is why I think that one should know one branch of mathematics well, being aware of many other branches of mathematics that are possible. I will just mention smooth infinitesimal analysis, non-standard analysis, Russian constructivism, intuitionist constructivism. You can read ordinary mathematics in any number of books, but these things you can still read the classics and understand. That means Bishop, Brouwer, Turing and so on.

RV: You speak of the influence of the Bourbakian enterprise in mathematisation of economics and that computational elements are often ignored by orthodox mathematical economics. Can you elaborate on that?

KV: I must say first that Robin Gandy, who was Alan Turing’s only PhD student, reviewed the first volume of Bourbaki’s book and predicted that it would not be constructivism that would be put in the dust heap of history, as Bourbaki claimed, but Bourbakianism. Today, Bourbakianism is no longer de rigueur in economics. Bourbakianism works on axiomatics. Axiomatics is different from axioms playing a subordinate role and there are rules and so on. Axioms are different from Hilbert and Euclid. Euclid had axioms. Breaking of the parallel postulate, which is really an axiom, led to non-standard models of space, which was important in Einstein’s relativity theory – that the sum of the angles in a triangle can be greater than 180 or less than 180. Hyperbolic or non-hyperbolic spaces were important. This had to do with the breaking of the axiom. Breaking of the axioms is important. The stranglehold of axiomatic development is important. Axiomatic development in economics owes a lot to Bourbaki, although I must say that I see very little reference to Bourbaki. Not even in Debreu is there reference to Bourbaki, but there is a reference to formalism in that. Bourbakists are not formalists. They are Bourbakists. They never accepted or understood Gödel's theorem or Turing’s computability. That has permeated mathematisation of economics without computation rules, without computable rules and so on because they have followed invisibly, imperceptibly, Bourbakian methods in this. Bourbakian methods are out of date. I just have to say that Hilbert’s axiomatic development of geometry of 1899 came not out of Euclid but out of other German workers who had done it before him, but that is an aside. The formal methods of Debreu have permeated economics: all economists pay lip service to general equilibrium theory, even the neo-Keynesians and of course the neoclassicals. They seem to do some variety of general equilibrium theory. Therefore, they operate with Bourbakian logic and so they work with out-of-date material.

RV: By Bourbakian method, do you refer to the whole enterprise of existence-uniqueness and that way of doing economics?

KV: Yes. Bourbaki attempted to prove the existence and uniqueness and all of mathematics was developed with existence-uniqueness principles, but on the basis of axiomatic development of algebra that van der Waerden and then later on MacLane and Birkhoff made. The existence-uniqueness principle, without a way of getting to what is proved to exist (in economics, for example, an equilibrium), was not part of even neoclassical economics. Even Fisher, Walras and Pareto tried to develop methods to show how to get to an equilibrium that they could show to exist or to be unique. Fisher used hydraulic principles, Walras used stock market principles, Pareto used analogue computing principles and so on, but they didn’t know that they were doing this. They didn’t know the mathematical underpinnings of what they were doing so they believed… These people who followed them took existence-uniqueness as the principal development, partly because of von Neumann’s influence in game theory and growth theory, but von Neumann himself changed later on in life when he understood that logic only mirrors a part of human thinking. What I mean by existence-uniqueness is the lack of a method for getting to the thing that is proved to exist or to be unique.

RV: It is interesting that you point out that there seems to be a schizophrenia between proving existence and methods to compute the object that they proved to exist. Why is this wedge so important?

KV: First of all, you ask why there is a wedge between existence-uniqueness and the method to arrive at whatever is proved to exist and is unique. My answer is based on two things. This is a fault of economic method, which is mimicking uncritically a mathematics that doesn’t pay any attention to meaning. I am only paraphrasing what Bishop said in his introduction – Bishop’s book of 1967 on Foundations of Constructive Analysis. The wedge is the thing that makes computable economics, constructive economics, intuitionism, intuition in general, important in economics. It is the wedge. Orthodox conventional economists try to pretend there is no wedge here, there is no room for intuition in this, that everything can be proved and the proof is based on proving the existence and uniqueness of entities that are of interest to economists, but the entities that are of interest to economists are defined by the mathematics that they know. They assume, for example, convexity because they can’t handle non-convex entities in the mathematics they know for the existence-uniqueness proof, so they assume convexity and resource allocation is in convex models. There are minor relaxations of the convexity principle, but they are really minor. They don’t touch the wedge as such. They have no place for intuition. Turing and Brouwer - Brouwer made a virtue of intuitionism, Turing gave importance to intuitionism and that is why it is important. So did Post – Emil Post, another founder of computability theory. I think it is because of this that they – conventional economists - don’t apply computable or constructive mathematics – because they are afraid of intuitionism. This is my own interpretation. If they were not afraid of intuitionism, they would apply it, but they are afraid that it is a back door to bring in subjectivism and they don’t want any subjectivism. They are against subjectivism. 
They think they are objective when they talk about classical mathematical entities, but it is not objective. Classical mathematical entities are as much subjective as constructive or non-standard or smooth analytical machines. It is not the fault of mathematics that it is intuitionistic. That is part of life that intuition is made important.

RV: You touched upon computable economics. It is a field that you have single-handedly developed in many ways. Could you explain its scope and the motivations behind computable economics in a way that relates to understanding and investigating economic problems?

KV: I want to make one distinction clear. Computable economics is not computational economics and it is not recursive economics.

RV: How are they different?

KV: Recursive economics is the economics of the neoclassicals. There is a famous textbook by Sargent and Ljungqvist – I believe it has gone through three or four editions now – which is called Recursive Macroeconomic Theory. Computational economics is the foundation for agent-based economics and they claim incorrectly that it is based on computability theory. Nothing that they say is computable in any computable mathematical sense. Recursive mathematics, recursive economics is recursive because it uses sequential analysis, Kalman filtering and (Bellman’s) dynamic programming. None of these are important in computable mathematics. Computable mathematics – intuition is important, imagination is important and the intuitionism of Brouwer is important. Approximations are important, algorithms are important. None of these things are important in recursive economics. Computational mathematics tries to say that approximation is important, but it is not important. Why computable economics? Why was I attracted to computability? Why did I define computable economics and consider it as a problem-solving apparatus for economics? I must confess that I came to computable economics after constructive economics. The path to constructive mathematics was through Russell’s autobiography, which introduced me to Wittgenstein and then learning that Wittgenstein came back to philosophy because he heard Brouwer’s lecture in Vienna in the late 1920s. Then I read Brouwer and then I went back to Russell’s Principles of Mathematics, where he talks about logicism, formalism and intuitionism. After that I became interested in intuitionism. When I read Sraffa’s book, I could not understand why it was being criticised as being imprecise because – he didn’t call it proof, he called it demonstration, for example – every demonstration was algorithmic, but I didn’t know any computable mathematics at that time. It was reading Hodges’s biography of Turing that made a difference.
I should mention here that I read the book as soon as it was published in 1983 and I announced a course on Turing’s mathematics and economics. Only one person applied to come. It was a secretary and she thought it was a touring machine and not Turing machines. She applied. I gave up that course, but from 1983 I have been redefining all the entities of economics in computable terms. Then I was able to solve the problems of choice – any choice – as a computable problem posed to a Turing machine. I was astonished that these were possible. Then I saw that Kolmogorov had already defined problem solving as an approach of constructive mathematics so I put computable economics and constructive mathematics together, but I didn’t know then that Simon had done it before me. He had done it for a special case where he assumed quite correctly and as foundations for any book on logic – and he used classical logic – the completeness theorem of Gödel. Gödel first proved the completeness theorem, then he proved incompleteness. Simon chose as his space of problems the problems that were complete in the sense that they were solvable. Then he put in place what he already called limited rationality in his thesis – bounded rationality inside complete spaces, searching with heuristics, not with computable processes. Simon was trying to model the economic reasoning behind looking for solutions to problems and then he said economists can’t keep looking. They stop at some time. When they stop, he called it satisficing. They don’t look for optimal solutions, they don’t look for rational solutions. They look for computable, boundedly rational, satisficing solutions. It was a gateway for me to talk about the impossibilities of orthodox economics based on rationality which they try to solve by ad hoc means. There is more ad-hockery in orthodox economics, mathematised, than in the impossibilities shown for problems that orthodox economics poses in economics. 
I was interested to bring these to the fore. Even though the initial results of computable economics are about the impossibility of computing solutions to problems posed by standard economics, and that is what I emphasised in the first few years of computable economics, I now see that you can also get positive results from this. This is in a way related to Arrow’s impossibility theorem. He showed the impossibility of logically marrying four or five conditions, but very few people know or acknowledge that this was logically based and that he was a research assistant of Tarski at the time Tarski wrote his elementary book on logic. Patrick Suppes, for example, is the only one I know who emphasises this aspect of Arrow. The impossibility theorem is impossible because it is framed inside a particular kind of logic. That same thing can be said of Simon. He frames it in a particular kind of logic. He frames it as a boundedly rational agent satisficing in a particular type of logic. There are no impossibilities there. The impossibilities that are there are because this logic is different from that logic. Simon implicitly emphasises completeness. Arrow does not emphasise completeness at all. He just works on logic as such in a classical mathematical way. My computable economics was going towards problem solving, but in a classic computability sense it was about showing the impossibility of solving the problems of standard economics instead of showing them as paradoxes and anomalies, as Allais and Ellsberg did in the beginning. For example, Savage’s book says, ‘I thank Allais and Ellsberg for pointing this out and now I correct my subjective expected utility statistics,’ but he never corrects finite versus infinite, continuous versus non-continuous and so on. I don’t think I have answered your question, but this is the way I have worked.
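
[Editorial illustration, not part of the recording.] The contrast drawn above between satisficing and optimising can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions: the function names `satisfice` and `optimise`, the aspiration level, the search budget `max_looks` and the list of offers are all hypothetical and do not come from Simon or from the interview. The satisficer stops at the first option that is good enough, or gives up when its search budget runs out; the optimiser must inspect every option.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def satisfice(options: Iterable[T], value: Callable[[T], float],
              aspiration: float, max_looks: int) -> Optional[T]:
    """Return the first option whose value reaches the aspiration level.

    The search stops after max_looks options (a hypothetical search budget);
    None is returned if nothing acceptable was found in time.
    """
    for looks, option in enumerate(options, start=1):
        if value(option) >= aspiration:
            return option          # good enough: stop searching
        if looks >= max_looks:
            break                  # budget exhausted: stop searching
    return None


def optimise(options: Iterable[T], value: Callable[[T], float]) -> T:
    """Inspect every option and return the best one."""
    return max(options, key=value)


# Hypothetical data: offers arrive one at a time.
offers = [3.0, 5.5, 7.2, 9.9, 6.1]
print(satisfice(offers, lambda x: x, aspiration=7.0, max_looks=4))  # 7.2
print(optimise(offers, lambda x: x))                                # 9.9
```

The satisficer never sees the offer of 9.9, because it stopped when 7.2 met its aspiration level. Where it stops depends on the order of arrival and on the aspiration level, not on a global maximum.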

RV: You have answered. I wanted to touch upon something that you just said about problem-solvers and problem-solving, which is an important element of your computable economics. The “agents as problem-solvers” view or metaphor seems to be in contrast with what we learn in typical graduate school textbooks, where agents are optimisers, maximisers or signal processors. Why is problem-solving a better metaphor in your view and what implications does this have on limits to rationality?

KV: You ask about problem-solving and what importance it has in economics. I would say social science in general. Let me first point out that Lucas in his Phillips lectures also talks about problem-solving, but they are quite different aspects of problem solving. Problem solving is important because that is what we have in economics and social science: problems. We have to solve them. We find methods to solve them and these methods, if they are tied to the straitjacket of rationality, equilibrium and optimisation, we can only solve a few of the problems. We have to solve the problems anyway so we have to expand the methods and expand the concepts of standard economics. That is what Simon does. I want to emphasise that irrational numbers and rational numbers and real numbers are based on the integers, quotients of integers. I will go one step further. Quotients of integers made me think of quotients of vectors. We don’t talk about quotients of vectors. Then you talk about Hamilton’s quaternions. I think there is a role for quaternions as well. Hamilton was inspired by problems that he met that he wanted to solve. This comes to grips with a question you asked earlier. You develop the mathematics to solve the problems. You don’t take the mathematics as given and then tailor the problem to suit the mathematics, to suit the solution of the mathematical principle. You tailor the mathematics. Hamilton tailored quotients of vectors. For example, complex numbers were debated for a long time before they were admitted into mathematics, but it helped in circuit theory, for example. Circuit theory was a practical theory. Practical problem solving requires mathematics to be expanded and Hamilton did so with quaternions. We haven’t used quaternions. We don’t use quotients of vectors. Take activity analysis models: it doesn’t use quotients of vectors, just takes vector analysis – at most, tensor analysis, which is a generalisation of vector analysis. 
I think problems are important. Problems are the guiding light of economics. Standard economics emasculates problems by the mathematics that they know or mathematics that is already developed. The danger is they will apply it, like the computational economists do, like the agent-based economists do. They will take constructive mathematics as it is and apply it to computational economics and to interpret what they call agent-based economics, whereas mathematicians expand this all the time due to real problems. For example, Per Martin-Löf’s type theory. Type theory can be linked to Russell’s definition of type theory to eliminate Gödelian and Berry-type paradoxes in mathematics from arising in set theory and set theory as a foundation for mathematics, but type theory doesn’t have to depend on any of these things. It comes from problems – Sraffa-type problems, any number of economic problems. I think problems are intrinsic to economics and social science in general. Agents individually and collectively try to solve problems. They try to mathematise it, but they develop the mathematics. Sometimes we learn about it, sometimes we don’t learn about it.

RV: You speak of method and problems developing methods. You have emphasised algorithmic and computational methods. From what I understand, computability theory is about what can and cannot be computed. It demarcates the boundary between these two. What of economic intuition that does not have a computational counterpart? In other words, I am asking: are there economic intuitions for which we cannot develop algorithms explicitly and if so, what is the epistemological status of this kind of intuition? Are they valid, are they guesswork?

KV: You define computability theory in terms of algorithms and intuitions, but I want to emphasise that it is also approximations. That is important. Approximations marry intuitions to algorithms. If you have intuitions that cannot be algorithmised, it means you have not found the right approximation yet to intuitions. Real life is about approximating intuitions so that you can write algorithms. It is true that there are intuitions that cannot be algorithmised, but pro tempore – temporarily. Once you learn deeply enough about intuitions, you can learn how to approximate it so that you can write an algorithm for it. When you can write an algorithm, then you can implement it. It is not always true that you can implement an algorithm that can be formalised, but that shows formalism’s limits as well. You asked also about method. Method is wedded a lot to formalisation, formalisation is wedded a lot to axioms, but they don’t give the rules of reasoning that go from axioms to theorems in that. Once you give a theorem, if you know the algorithm that goes from axioms to theorems then you know the proof of the theorem. That step is never taken by orthodoxy of any sort, that going from axioms to theorems stepwise and writing an algorithm. To write an algorithm, you need intuition and you need to approximate the intuition to write the algorithm. You must have an idea of what is the theorem that you can get out of this approximation using these axioms and these rules of reasoning. You can’t say reasoning is definitely going to be rational reasoning. In Moby-Dick, Captain Ahab says, ‘My methods are completely rational. It is my goals that are not rational.’ The theorems can be irrational, but his methods were rational. That means he knew how to approximate intuition.

RV: In some of your results in computable economics, you point to the uncomputability of certain equilibria in which you can’t develop algorithms. But you also show that excess demand function is algorithmically undecidable. This latter result always seemed puzzling to me because it seems to attack a central tenet of general equilibrium theory. Is that so?

KV: It is so, but remember that you ask about excess demand functions and their failure or non-failure in abstract terms, and their importance in general equilibrium theory. Excess demand functions are very important in general equilibrium theory, but none of the general equilibrium economic people acknowledge that excess demand is wedded to intuition. It is intuitionism that gives rise to the Church-Turing thesis. It is giving rise to intuitive development of effective calculability. The excess demand function is not effectively calculable in an intuitionistic sense. It may be calculable for an agent who has a different type of intuition. This intuition may be about the general tribes in Ceylon, for example, or in Brazil. Their intuition may be different. We have to learn their intuition to say that excess demand function is universally failing general equilibrium economics, but general equilibrium economics uses the excess demand function of classical mathematics, which has no place for intuition. I use the intuitive definition of effective calculability to show that in constructive or computable mathematics, excess demand functions are non-existent. It has to do with intuition. They banish intuition from general equilibrium theory. You have to admit intuition, and whose intuition is important.

RV: Can you give some examples of undecidability and uncomputability in economic theory; also examples of constructive ways of doing economics in the history of the subject?

KV: First, I will tell you that you can look at Simon to look at decidable results and Simon’s development of the Turing machine concept of algorithms. You can look at Boole and Jevons to talk about exclusive ‘or’ or inclusive ‘or’ and later development by McCulloch and Pitts of universality, universal computation in these systems of reasoning as used by Conway with surreal numbers and so on. There are many different number systems and ordinary economics uses only one type of number system. Now examples of uncomputability are the rationality postulates of standard economics. The general equilibrium of standard economics is non-constructive and uncomputable for many reasons – not only for computable reasons, but also for constructive reasons in an intuitionistic sense. Constructive reasons because the Bolzano-Weierstrass theorem is intuitionistically unacceptable and the Bolzano-Weierstrass theorem is used in every algorithm of general equilibrium theory proving the existence of equilibrium. Even the Sperner simplex, even the simplex of Scarf, uses the Bolzano-Weierstrass theorem. There is a mathematics without using the Bolzano-Weierstrass theorem. Just for clarification, the Bolzano-Weierstrass theorem is like the ‘game’ of 20 questions. You go right or left. So you can toss a coin and go right, but you don’t know whether you will reach the goal because the goal might be if you take the left turn. You assume that the goal is at the end. This is where Ahab comes in. My goals are irrational; so these goals are defined after the effect. That is why the Scarf proof is not computable nor constructive. Scarf is wrong to say that Brouwer is confined to infinite processes that must have constructive decisions with them. Those are examples like rationality and equilibria from standard economics. For the example of computable solutions, you can look at Simon. 
He gives umpteen examples of computable solutions, even of the proof in Whitehead and Russell of the first volume of Principia Mathematica, first eight chapters, without using Turing machine algorithms, but heuristics. Heuristics are the way human beings think. Hao Wang showed it by using algorithms. One must read Simon and his collaborator Newell’s paper on this from 1958 to know. It goes back to the Jevons-Boole controversy as well, but that is another story.

RV: Now I would like to move more to the microeconomic part. You make a distinction between classical and modern behavioural economics in your writings. Could you elaborate on what this distinction is?

KV: It is very simple. Classical behavioural economics is the kind that Simon, Nelson and Winter, and Day and that kind of people emphasise. Modern behavioural economics is the kind that comes out of the acceptance of subjective expected utility theory. That means you accept subjective probability theory of the Savage variety, the resulting subjective expected utility. None of this is accepted by the classical behavioural economists. They accept the probability theory of algorithmic probability theory, which is wedded to von Mises’s definition of patterns for randomness first and probability afterwards. Randomness is patternless; patternless is randomness. Patternless is defined algorithmically. These people are completely algorithmic in their definitions of behavioural entities and their decision processes for solving problems. The modern behavioural economists who accept subjective expected utility and therefore subjective probability do not accept an algorithmic definition of probability theory or utility theory so they have to face Allais paradoxes and Ellsberg paradoxes which they solved logically – in the classical logical way. This tradition names the paradoxes as anomalies and goes on through experimental economics and modern behavioural economics of the Thaler variety. They accept all the tenets of neoclassical economics as far as utility maximisation and equilibrium search are concerned. None of this is accepted by classical behavioural economists. That is the distinction.
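
[Editorial illustration, not part of the recording.] The idea that randomness means the absence of any algorithmically describable pattern can be illustrated, very roughly, with a compressor. This is a sketch under a strong assumption: a general-purpose compressor (`zlib` here) is used as a crude stand-in for the shortest algorithmic description, which is itself not computable. The helper name `compression_ratio` and the two test strings are hypothetical.

```python
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (smaller means more pattern found)."""
    return len(zlib.compress(data, 9)) / len(data)


# A string with an obvious pattern: "01" repeated 5000 times.
patterned = b"01" * 5000

# Bytes with no pattern that zlib can detect. They come from a seeded
# pseudo-random generator, so a short program does produce them: they only
# look patternless to this particular compressor.
rng = random.Random(0)
noisy = bytes(rng.getrandbits(8) for _ in range(10000))

print(compression_ratio(patterned))  # far below 1
print(compression_ratio(noisy))      # close to 1
```

The second string is a useful caution. It is generated by a few lines of code, so in the algorithmic sense it is not random at all, yet the compressor finds nothing to exploit. A compressor can show that a pattern exists; it cannot certify that none does.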

RV: You have been very critical of the relevance of the notion of equilibrium in economic analysis, but from Classicals onwards we have been told that equilibrium is an important concept in grounding our intuition or reasoning about economics. Is this relevant? What would non-equilibrium or a-equilibrium economics look like in that case?

KV: I want to say that Sraffa in his book of 1960 doesn’t talk about equilibrium at all. If you talk about equilibrium, you talk about resource allocation, efficient resource allocation and so on. Equity is left aside. I prefer not to have any equilibrium concept. The classical behavioural economists did not work with any equilibrium concept. They defined non-equilibrium, but I don’t work with any equilibrium at all and computability theory has no equilibrium at all. An algorithm either stops or doesn’t stop. It may not stop. Many algorithms don’t stop at all. There is a stopping rule. Some don’t stop, some circulate, some keep on going, so Emil Post defined recursively enumerable sets different from recursive sets on the basis of stopping rules of algorithm. I don’t want to use the word equilibrium or non-equilibrium or a-equilibrium, without equilibrium. Economics is algorithmic. Algorithms have no equilibrium concepts. They have stopping rules. They are made from approximations of intuitions. That is enough for me.

RV: That is very interesting and this brings me to a related question, which is about methodology as well. It is on the micro foundations that seem to be quite accepted and fashionable in macroeconomics today. What are your thoughts on this? You talk about phenomenological macroeconomics and this seems to be in sharp contrast to that.

KV: This is entirely my personal view – almost entirely. I think the future of micro foundations for macroeconomics depends on microeconomics. Neoclassicals and new classicals – hardcore neoclassicals and new classicals – want micro foundations. New classicals in fact think there is only microeconomics to do. This has to do with the fallacy of composition. I think in the future only macroeconomics will survive and that is because of the fallacy of composition and because microeconomics will be dissolved by the prevalence of algorithmic economics. All micro entities, all individual entities, would work with algorithms. 
Algorithms are intuitive, they can be approximated, but algorithmic, and therefore there will be no microeconomics to give the foundations for macroeconomics. Macroeconomics is another thing altogether. It has no micro foundations at all. It has its own foundations and the micro foundations have to do with the fallacy of composition and its failure in providing foundations for macro, but macroeconomics has its own foundations, has its own entities, and it gives problems as well – not only the agent’s problems or the so-called micro problems, but macroeconomic problems, national economic problems. Therefore, we must develop macroeconomics as much as we can, which is entirely my view. For example, in the case of cycle theory, using for example the Poincaré-Bendixson theorem, one proves the existence of cycles, but now the Poincaré-Bendixson theorem is algorithmised. Mathematics in the age of the Turing machine, as Hales calls it, gives a new life to macroeconomics. Macroeconomics is dynamic intrinsically because algorithms are dynamic. Macroeconomics has no static counterpart at all in the kind of macroeconomics I do and the kind of macroeconomics that is relevant even in Paul Samuelson’s part 2. Stability concepts will be developed as the dynamic concepts of macroeconomics are given an algorithmic content. Are these algorithms stable? Are these algorithms therefore stoppable or not stoppable? What do we do if we stop them in the middle? We approximate when we stop them in the middle of a process. All engineers, all imaginative engineers, welcomed infinite processes because they could be stopped.
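
[Editorial illustration, not part of the recording.] The remarks above about stopping rules, and about approximating by stopping an infinite process in the middle, can be sketched in Python. This is a minimal sketch under stated assumptions: the helper `run`, the step budget and the two example processes are hypothetical and chosen only for illustration. A finite budget can report that a process has not stopped yet; it cannot decide, in general, whether the process would ever stop.

```python
from typing import Callable, Tuple, TypeVar

S = TypeVar("S")


def run(step: Callable[[S], S], stop: Callable[[S], bool],
        state: S, budget: int) -> Tuple[S, bool]:
    """Apply `step` until the stopping rule holds or `budget` steps are used.

    Returns the last state and whether the stopping rule was satisfied.
    """
    for _ in range(budget):
        if stop(state):
            return state, True
        state = step(state)
    return state, stop(state)


def heron(x: float) -> float:
    """One step of Heron's iteration, an unending process converging to sqrt(2)."""
    return 0.5 * (x + 2.0 / x)


def close_enough(x: float) -> bool:
    """Stopping rule: the square of x is within a small tolerance of 2."""
    return abs(x * x - 2.0) < 1e-12


print(run(heron, close_enough, 1.0, budget=50))  # stops by its own rule
print(run(heron, close_enough, 1.0, budget=2))   # cut off early: a rougher approximation
print(run(lambda n: n + 1, lambda n: False, 0, budget=1000))  # never stops by its own rule
```

In the second call the process is interrupted after two steps, and the state it had reached serves as an approximation. In the third call the budget runs out and nothing more can be concluded from the run itself.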

RV: Because you touched upon the fallacy of composition, this reminds me of another aspect to Keynes’s work, which is related to fundamental uncertainty. Is this in the same class as the impossibility results or undecidability results that you talked about earlier? Is there a connection?

KV: I must say that I have never found the words fundamental uncertainty in Keynes’s writing. It is Minsky who made a big deal of this fundamental uncertainty. I don’t even believe there is such a thing as fundamental uncertainty. Uncertainty has to do with randomness. Randomness is number theoretic, patternless, algorithmised, so I don’t think this fundamental uncertainty has anything to do with algorithmic undecidability or algorithmic computability or algorithmic uncomputability. Fundamental uncertainty is in the same category as subjective expected utility-based probability. Neither Ramsey nor de Finetti would subscribe to fundamental uncertainty as Minsky defines it and as some of these post-Keynesians define it.

RV: I want to ask you a more general question regarding context. You describe algorithms and computational methods and so on. What is the place for specific historical and institutional elements in this framework? Do contextual elements – let’s say a specific historical time in which a society that is being analysed – do they matter?

KV: I think it matters because intuition is important. Intuition is history dependent. Whoever talks about intuition talks about intuition in a historical sense. This also Brouwer does. His concept of intuition, Heyting, Bishop, Per Martin-Löf and so on have developed because historically it is evolving, but not in a Darwinian or Mendelian sense – evolving intuition. History is very important, but not in the sense that Joan Robinson talks about history versus equilibrium. She talks about history in an ahistorical sense: history is given. History is not given. We reinterpret history all the time, so we reinterpret intuitions all the time because we reinterpret history all the time. History is important and study of history is important. Knowing history is important. To the extent that you know history and you are a master of history, you can talk about intuitions approximated to algorithms. Algorithms are history dependent.

RV: In your work you put Keynes, Sraffa, Simon, Brouwer, Goodwin and Turing together. What do you see as a single connecting thread?

KV: You talk about ASSRU (Algorithmic Social Science Research Unit) really because in that we put together Simon, Turing, Brouwer, Goodwin, Keynes and Sraffa. This is about computability, bounded rationality, satisficing, intuitionism and then the irrelevance of equilibrium in Sraffa’s work and Goodwin’s cycle theory and Keynes’s overall view of the importance of morality in daily affairs, if you like. Keynes learnt about morality in Cambridge from Russell and Moore. It was the same time. It was a time Keynes was an undergraduate and was a member of the Apostles society, but the unifying theme here is problem solving. All of them were interested in problem solving, all emphasised intuition in their work explicitly and implicitly. Brouwer emphasised it explicitly, Turing almost explicitly, Simon almost explicitly, Keynes, Sraffa and Goodwin implicitly. It is intuition that is the guiding force, but morality plays a role. All of these people had moral principles that were history dependent; not moral principles just given, but history dependent.

RV: You have talked about many of your influences throughout the interview. Could you recollect your formative years and what books or events or people had decisive influence in the unconventional path that you took?

KV: Two things from childhood come to mind. One is my father telling that he went into the kitchen of our house in the country and he found a man seated there eating. He said, ‘How could I tell him to leave the kitchen? He was hungry, he was eating.’ That made an impact on me on poverty. Then the second thing was when a man came accused of murder and he was going to be charged. He asked my father for help in appearing for him, but he didn’t have money to pay. My father chased him away, telling him to go and ask his MP, who he had worked for against my father. Then after a few minutes he asked me to bring this man back. So I realised poverty was important. Poverty is what drove him to come to my father and ask. Poverty has since then been very important for me, for poverty alleviation. This took form in Japan, where I studied, in the mathematics teacher who taught me the meaning of proof in mathematics being interested in poverty as well. It was the same when I came to economics. I was driven by the considerations for poverty by Gunnar Myrdal’s book on Asian Drama and the poverty of Asian people, but Thalberg and Goodwin were also interested in poverty. They were involved in poverty alleviation so they were important for me in poverty alleviation. So were Turing, Brouwer and Russell and Wittgenstein. All of them were involved with some kind of poverty alleviation. Poverty was a formative concern, but as for teachers and mathematics and proof and economics and so on, in high school I had a very good teacher of mathematics who taught me physics, applied mathematics, pure mathematics and advanced mathematics, in all of which I did well at the school leaving exam. He taught what proof was and what axioms were. This was continued in Kyoto as an undergraduate by the mathematics teacher. 
When I came to economics, I was astonished at people talking of proof without knowing what a proof was, especially the general equilibrium theories, whereas Goodwin and Thalberg did not prove anything. I came to realise that proof was important and I started reading on proof, books and ideas on proof in mathematics and proof in general. That is the thing that led me to Russell’s autobiography, Wittgenstein and Brouwer and so on, and Keynes. I still remember that I bought Keynes long before I understood what Keynes was saying in the General Theory. I bought the General Theory in 1967 when I was an engineering student, but I didn’t understand anything. Anyway, coming back to your question: books and people who were important for me. The books that were important were Keynes’s General Theory, Sraffa’s Production of Commodities by Means of Commodities, Samuelson’s Foundations of Economic Analysis. I would class these three as in a different class, especially part 2 of Samuelson’s Foundations of Economic Analysis and the books of Brouwer, particularly on intuitionism. His books came later, usually papers, but I was guided to this with Russell and Wittgenstein. Wittgenstein only wrote one book in his lifetime and one other book he prepared, but many other books have been published and his lectures have been published long before I reached adulthood. From this, I understood about constructivism and intuitionism. The books and the formative ideas were these three books on economics and books on constructive mathematics through philosophy and then computable economics through the papers of Turing – latterly also of Post. I didn’t know of Post’s work originally, but I came to realise that Post was important. Then later on – much later on – I came to realise Simon’s importance in this and Simon’s books. There is a lot of repetition in Simon. He published papers over and over again, but you can distil Simon’s ideas into three or four books and papers. 
The most important book of Simon is his autobiography, in which he summarises most of the concepts that came. Then you can go backwards to some of the papers. You don’t have to read, for example, the 1972 big book with Newell on human problem solving. Human problem solving was the important thing for him. It was how to model human problem solving that led to bounded rationality. Humans were solving the problems, organisations were solving these problems and his organisation theory is different from the organisation theory of Arrow, for example. Arrow’s limits to organisation are limits coming from the particular mathematics of general equilibrium theory. Simon’s organisation theory is coming from boundedly rational satisficing organisations. My formative influences were my mathematics teachers, my economics teachers and the books in mathematics, philosophy and economics. They can be counted on the fingers of two hands at most.

RV: You spoke about varieties of mathematics and you touch upon many different traditions and varieties of economics. You have had the experience of teaching in many different universities and different continents. How should one teach, in your opinion, economics in a plural fashion? Is there a right economics to teach?

KV: My answer is this: you mentioned that I spoke about variety of mathematics and variety of economics. I think there are only two types of economics, but there are many types of mathematics. Finite, but many types. They grow. Some kinds of mathematics die, but only two types of economics: classical and neoclassical. The neoclassical model requires full employment, equilibrium, rationality and all the paraphernalia of this. Classical methods do not require any of these concepts. There can be unemployment, there can be no equilibrium and so on. Classical economics was subverted by neoclassical economics in the name of continuity and in the name of generalising. They did not generalise. They mathematised neoclassical economics and for the mathematisation they chose one type of mathematics and closed their eyes to all other types of mathematics. When I teach, I teach students first of all to have an open mind; secondly, to master one kind of mathematics and one kind of economics, but with an open mind to be sceptical about what they master. Scepticism is the mother of pluralism in my opinion. I don’t teach pluralistic economics, I don’t advocate pluralism, but I advocate scepticism, which is one step ahead or above pluralism. To be sceptical of economics and mathematics is a healthy attitude. To be sceptical in general is a healthy attitude. It is because some of these people were sceptical of conventional modes of thought that they were able to extend, generalise and use other types of thought and way of reasoning and so on. This is true of the people like Brouwer and this is true of economics people like Keynes. Keynes was a master of scepticism. There used to be a saying, ‘If you have four views, three of them are held by Keynes,’ but Keynes also is supposed to have said, ‘When facts change, I change.’ That means scepticism. It is not that facts change, it is just to be sceptical of whatever you do. 
To emphasise the answer to your question on pluralism: I teach people to be sceptical, but to learn one mathematics and one economics thoroughly with a sceptical, open mind to its generalising. That is what made Sraffa great, that is what made Brouwer great, that is what made great people yet. The moment Hilbert changed and became non-sceptical, he became dogmatic. Turing was always sceptical. He was always innocent and he was always sceptical.

RV: I have had the good fortune of attending your lectures as a graduate student and you have always had a strong history of thought component in your lectures, and used a lot of puzzles and toys and so on. What role does history of thought play in economics? I ask this because sciences where mathematics has a dominant role usually pay very little attention to the history of the subject. Physics is an example, but there are exceptions. Is there any reason why we need to teach students history of thought?

KV: You give the example that in physics one doesn’t teach the history of physics, in my opinion as much as one should teach. Let me first say history of thought is important because I view history, everything, as a tree. The tree is something in which you can walk backwards and forwards. History of thought is to walk backwards in the tree and to find nodes where you could have taken a certain path that you didn’t take. It is like the Bolzano-Weierstrass theorem, but you can go back in time. Therefore, students to whom I teach economics, asking them to master one kind of economics, I also teach the history or try to emphasise the history of thought from a tree perspective of going back and finding alternative paths that could have been taken, but were not taken. Why were they not taken is a question that the student must ask, but the teacher’s role is to point out that these were not taken and give his or her view of why it was not taken. My own opinion is based on mathematics, that it is not taken because this history of thought that they go back to, they go back to classical mathematics. But in the case of physics, I am not sure I agree with you that they don’t teach the history of thought. In the case of physics, proof is not important. It is workability that is important, but when one talks about important concepts in physics like the Feynman diagrams or the Dirac delta function, then one goes back and tries to find out in what sense is the Dirac delta function a function of the fact that he was an electrical engineer and he was first taught electrical oscillation theory – this is Dirac – and that he developed the idea of the Dirac delta function from electrical oscillation theory. Feynman diagrams, you have to go back to proofs and the importance of proofs. You can’t axiomatise the Feynman diagrams and the Feynman principle. What is the role of axioms? What is the role of proof? Feynman went back to try to see how Newton, for example, proved. 
Newton used geometric methods to prove. Chandrasekhar from astronomy went back to Newton and Galileo to try to understand their proof techniques. What proof techniques do we use now to make these things workable? Feynman first off made the diagrams so that they worked in quantum electrodynamics for solving certain problems, mind you. Then he wondered why he was not able to axiomatise this and he went back to history. Is it because I looked for workability without looking for proofs? How did Newton prove this? In fact, Newton used non-standard analysis in his infinitesimal calculus. Anyway, that is beside the point. I think history of thought is important because you go back all the time and you have to teach the history of thought as the path in a tree that is travelable both up and down, sideways and all directions.

RV: What is your advice for young economists who are starting their career or students of economics who are in their universities?

KV: I don’t think I am equipped to give them advice on this. I can only outline the path that I took and where I have ended up. I can now tell I have ended up with more scepticism, more questions than I began with. That is where you have to end up, I think: with more questions and much more scepticism about methods and proofs and axioms and epistemology in general.

(End of recording)